I know it's a preview, but I want to use this every day.

For a company aiming to be the knowledge graph of the entire planet, image-based AI services are an obvious thing for Google to want to get right. And if you've used Google Photos over the last couple of years, you know there have been huge strides in these capabilities. Facial and object recognition in Google Photos can be impressive, and there's a lot of good that can come from using these features in the real world. Being able to offer visually impaired people a camera that can rapidly identify storefronts and street signs is incredible on its own.

Google Lens is headed in the right direction, but it's clearly not ready for daily use just yet.

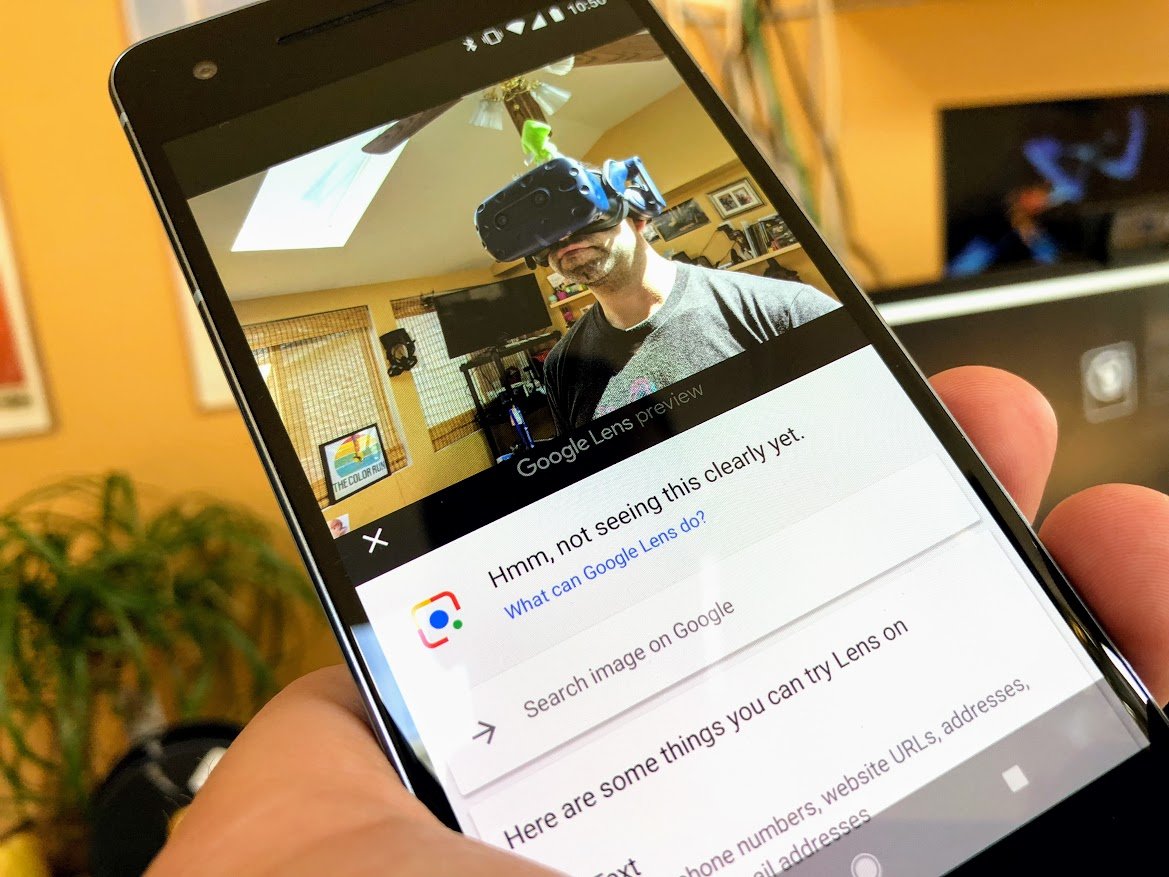

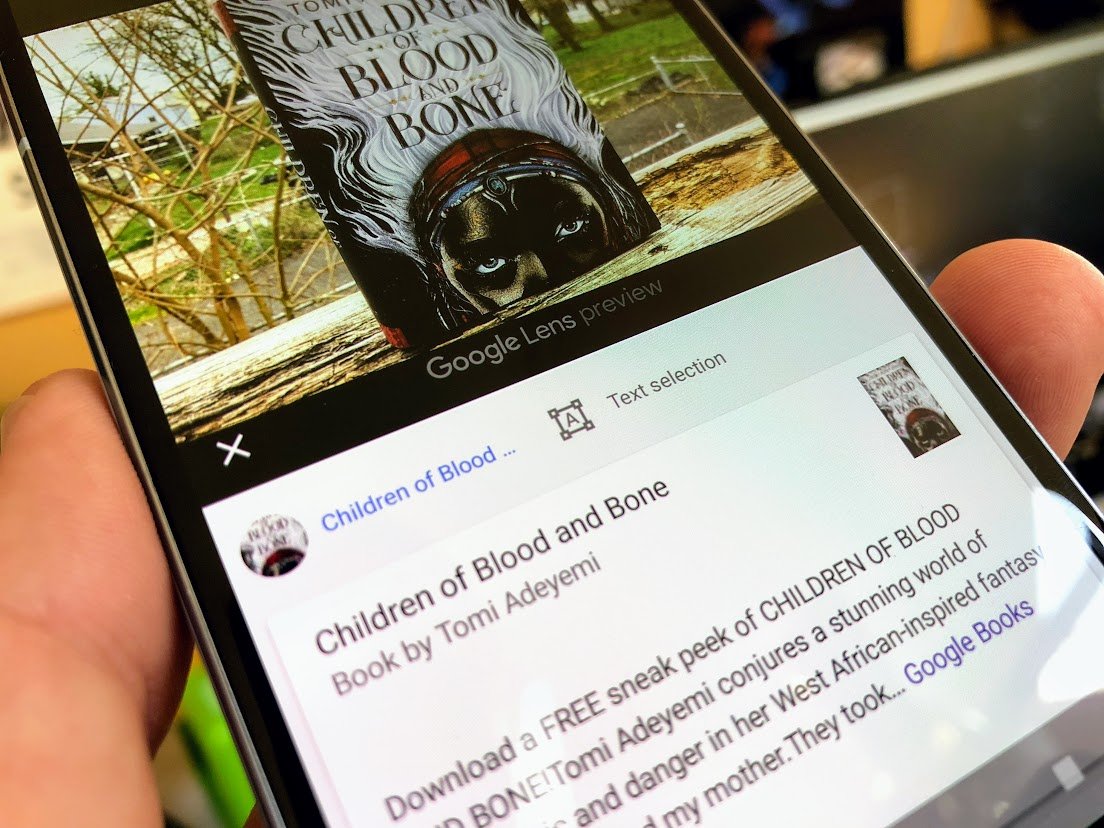

As a Google Pixel owner, I've had access to Lens for six months now. This beta period for Lens has been a little clumsy, which is to be expected. I point Lens at an unusual book a friend of mine has, and instead of telling me where I can buy that book for myself, it just identifies a snippet of text from the cover. I ask Lens to scan a photo of a movie theater marquee, and it has no idea what is in the photo and doesn't offer to let me buy tickets for the show like it's supposed to. I take a photo of my Shetland Sheepdog, and Lens identifies her as a Rough Collie. Alright, that last one is nearly impossible to get right from a photo, but the point is that Google Lens doesn't yet reliably do most of the things it claims it can do.

To Google's credit, the things Lens gets right, it gets right fast. I love being able to use Lens for real-time language translation. Point Lens at a menu written in another language and you get immediate translations right on the page, as though you'd been looking at an English menu the whole time. Snap a photo of a business card and Lens is ready to add that information to my contacts. I've used individual apps for these features that have worked reasonably well in the past, but unifying them in the same place I access all of my photos is excellent.

I'm also aware that these are still very early days for Lens. It says 'Preview' right in the app, after all. While Pixel owners have had access to the feature for half a year, most of the Android world has only had it for a little over a month. And when you understand how this software works, that's an important detail. Google's machine learning relies heavily on massive contributions of data, which it can quickly sift through, using things that have already been correctly identified to better identify the next thing. It could be argued that Google Lens has only just begun its beta test, now that everyone has access to it.

At the same time, Lens was announced a full year ago, and I still can't reliably point it at a flower and have it tell me what kind it is. It's a cool thing to have access to, but I sincerely hope Google is able to make this feature something special in the not-too-distant future.

from Android Central - Android Forums, News, Reviews, Help and Android Wallpapers https://ift.tt/2KefTPQ

via IFTTT